The original title of this blog would have been “AI in Law: A Year of Hype, but Where's the Progress” if not for the launch of Deep Research.

From Bill Gates to JD Vance, law is frequently mentioned as a field primed for AI transformation. The promise? Smarter legal research, faster contract drafting, and justice for all. The reality? Well, the results were underwhelming.

In an effort to update the course materials for Generative AI for Legal Professionals, we tested the same ~50 legal use cases that we used a year ago against the most up-to-date Large Language Models (LLMs): ChatGPT, Gemini, Claude and Copilot. The results were so unimpressive that we saw no reason for an update. AI has been making significant strides in coding and math, but when it comes to legal tasks? Meh.

Then, Deep Research dropped. Our team’s first reaction? “Wow!” “Amazing!” “Unbelievable!”

The shift was so dramatic that I scrapped my original blog and asked the team to embark on a data-driven evaluation. Is Deep Research a game changer? Here is a summary of our findings.

Summary of Findings

The results from Deep Research are significantly better than those from the regular models across all tools.

Among the three tools tested, ChatGPT secured a lopsided victory, ranking significantly higher than its peers in comprehensiveness and depth of the analysis.

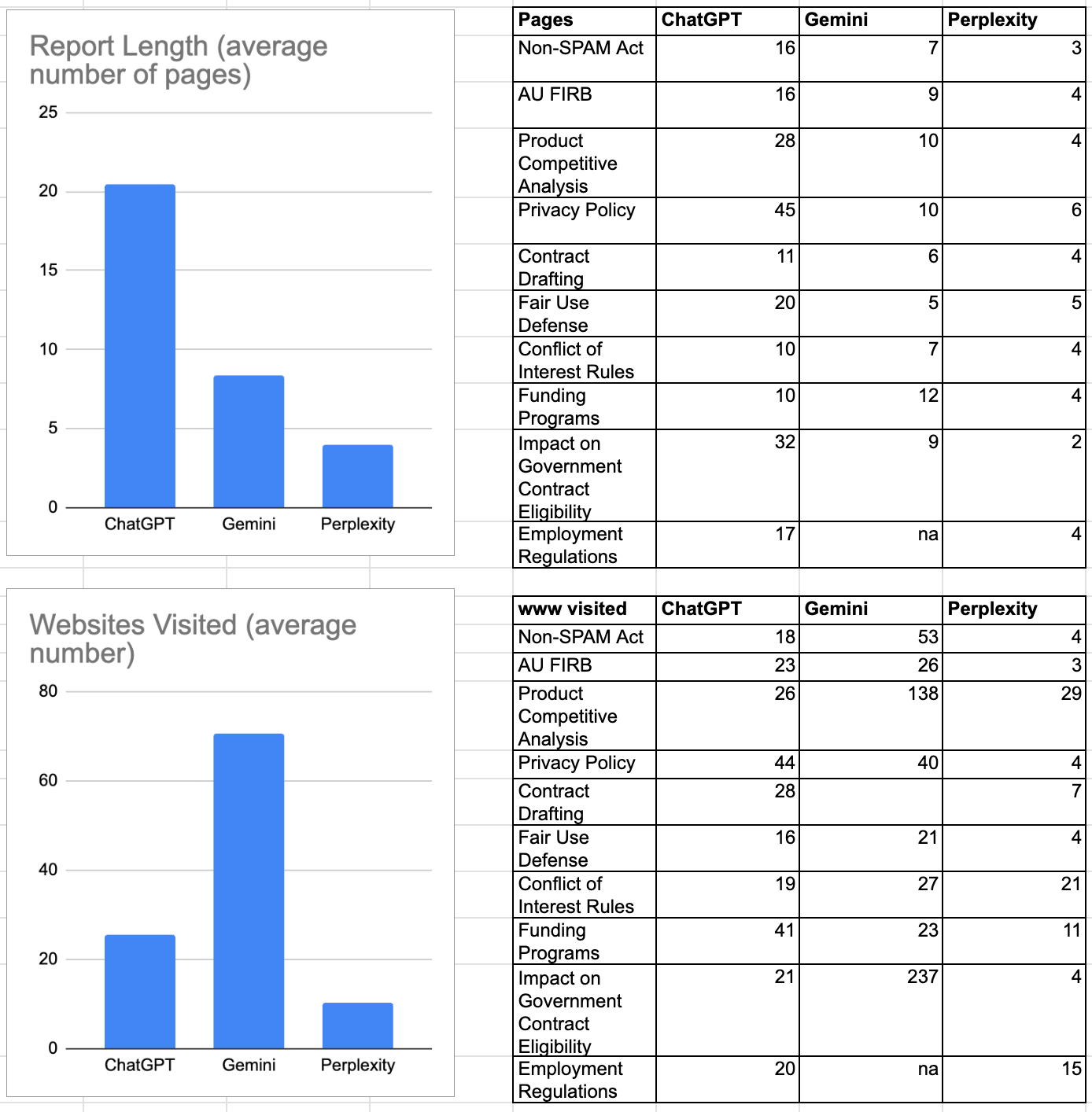

Comprehensiveness comes at a cost. ChatGPT reports range from 10 to 45 pages, making them hard for a human reader to digest and validate.

Senior lawyers rank ChatGPT 1st in 8 out of 10 topics, compared to 7 by the mid-level lawyer and 5 by the junior lawyer.

Perplexity’s brevity and clarity on recent legal and regulatory changes win it 1st place in 2-3 topics, while its peers dwell on older cases and regulations.

Gemini lands in an awkward middle or in last place on every topic for the senior and mid-level lawyers, making us wonder why anyone would pay for Gemini when Perplexity is free.

Gemini cites the most sources, from 23 to 237 websites, compared to an average of 25 for ChatGPT and 10 for Perplexity. More is not always better!

Even the best results cannot replicate sophisticated legal advice that allows clients to take on calculated business risks.

$200/month for ChatGPT’s long, deep reports vs. $0/month for Perplexity’s concise but limited responses? 3 out of the 4 lawyers opt for the latter!

Now let’s dive into the details.

What Is Deep Research?

Deep Research is a new feature introduced by Google, OpenAI and Perplexity. If you are a paid user of ChatGPT Pro ($200/month) or Gemini ($19.99/month), simply select “Deep Research” mode before asking your question. Perplexity took advantage of the recently released DeepSeek open source model and leapfrogged its competition by offering Deep Research for free.

This new feature doesn’t just “do the googling” for you – it handles all that comes after. Unlike the “older” AI, Deep Research actively searches for, reads and connects information from tens or hundreds of websites. It then produces a detailed report on complex topics, providing a citation for each sentence where applicable. Hours, days or even weeks of human work can be compressed into 5-10 minutes. Like a gaming streamer on Twitch, Deep Research “narrates” its steps on a side panel while executing the task. See an example of such steps here.

How Does It Work?

Perplexity claims that its Deep Research “reasons about what to do next, refining its research plan as it learns.” OpenAI claims that its Deep Research “learned to plan and execute a multi-step trajectory to find the data it needs, backtracking and reacting to real-time information where necessary.”

Simply put, in response to a human request, Deep Research can follow tens to hundreds of steps on its own and adjust its steps based on content it reads from the sources. It also likes to show off while doing it. The results are promising. Media coverage exploded with reports of Deep Research demonstrating capabilities approaching those of a PhD student – only much faster.

Evaluation Methodology

Intrigued by the hype, we conducted a data-driven evaluation using three leading Deep Research tools: ChatGPT, Gemini, and Perplexity.

Number of Questions Asked: 10, ranging from conflict of interest rules to fair use defense to copyright infringement claims. See the list of topics below.

Number of Reports Evaluated: 30 (3 for each of the 10 questions).

Number of Evaluators: 4 lawyers.

Two senior lawyers with 25+ years of experience, splitting review responsibilities based on areas of expertise.

One mid-level lawyer with 18 years of experience.

One junior lawyer with 2 years of experience.

Scoring: Each lawyer independently ranks the three reports in response to each question as 1st, 2nd or 3rd.

Qualitative Review: Each lawyer also provided qualitative feedback for their scores.
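For readers who want to run a similar comparison, the scoring step can be sketched in a few lines of Python. This is only an illustration of how 1st/2nd/3rd rankings from one evaluator could be tallied into first-place counts; the function names and the two placeholder questions are hypothetical and are not our evaluation data or tooling.

```python
from collections import Counter

TOOLS = ("ChatGPT", "Gemini", "Perplexity")


def first_place_counts(rankings):
    """Count how often each tool was ranked 1st by a single evaluator.

    `rankings` maps a question label to a dict of tool -> rank (1, 2 or 3).
    """
    counts = Counter({tool: 0 for tool in TOOLS})
    for question, ranks in rankings.items():
        # Every question must rank each tool exactly once as 1st, 2nd or 3rd.
        if set(ranks) != set(TOOLS) or sorted(ranks.values()) != [1, 2, 3]:
            raise ValueError(f"invalid ranking for {question!r}")
        winner = min(ranks, key=ranks.get)
        counts[winner] += 1
    return counts


def first_place_share(counts, tool):
    """Fraction of questions on which `tool` was ranked 1st."""
    total = sum(counts.values())
    return counts[tool] / total if total else 0.0


# Placeholder rankings for illustration only; NOT the data from this evaluation.
example = {
    "Question A": {"ChatGPT": 1, "Gemini": 2, "Perplexity": 3},
    "Question B": {"ChatGPT": 2, "Gemini": 3, "Perplexity": 1},
}
counts = first_place_counts(example)
print(dict(counts))
print(first_place_share(counts, "ChatGPT"))
```

Running the same tally once per evaluator makes it easy to compare how often each lawyer put a given tool in 1st place.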

Findings

1. ChatGPT is the overall winner.

The result was a lopsided victory for ChatGPT, voted No. 1 in 7 out of 10 cases. Check out example results here.

Perplexity demonstrated a concise, focused approach, delivering direct answers without excessive elaboration, but the concise answers sometimes came at the expense of missing important points.

Gemini landed in an awkward middle—neither comprehensive nor succinct. At times, it provided accurate insights, but other times, it missed the mark, leaving responses feeling inconsistent and unfocused.

ChatGPT provided comprehensive, detailed responses with extensive context and analysis, but it was at times verbose, repetitive, and unable to synthesize the information concisely.

2. Number of sources consulted does not correlate with quality and depth.

Surprisingly, even though ChatGPT’s reports were the longest, Gemini consulted the largest number of websites to draft its reports. The disparity between the number of sites visited and the length and quality of the reports might be attributable to ChatGPT’s ability to choose higher quality sources or its sophistication in processing its sources to draft more nuanced and substantive reports.

3. Senior lawyers prefer ChatGPT due to its nuanced analysis.

ChatGPT’s edge, however, varied depending on lawyers’ years of experience. The longer the lawyer's experience, the higher they ranked ChatGPT. The junior lawyer preferred a more straightforward and brief response, while the more experienced lawyers valued the more nuanced analysis despite the verbosity. ChatGPT outperformed the other tools in only 50% of the cases when ranked by the junior lawyer, compared to 80% by senior lawyers and 70% by the mid-level lawyer.

4. ChatGPT’s lengthy results pose challenges for human comprehension and validation.

One major downside of ChatGPT is that the sheer length of the results makes human review and validation extremely challenging. In one instance, ChatGPT pulled the first part of a clause from a precedent agreement (with citation) and replaced the second part with new language. While the new language is technically correct, this kind of silent substitution creates a risk of mistakes or oversight that would take significant legal expertise to catch. In other reports, key words were randomly omitted and uncustomary examples were included in a contract.

The length of the results, coupled with hard-to-detect mistakes, raises concerns about the practicality of using Deep Research to draft long-form contracts or court filings, where the accuracy requirement is very high. Such risk would be lower in generating generic legal research or short-form documents.

5. Deep Research cannot replace sophisticated legal advice.

While Deep Research can efficiently generate well-structured legal research, contracts and policies, it likely won’t replace the sophisticated judgment of experienced lawyers. For example, the legal advice given on the Conflict of Interest Rules is conservative, which may not be in line with the requirements of clients who are ready to take on calculated business risks. My personal observation is that most AI-generated legal advice leans conservative, resembling the cautious approach of a junior lawyer rather than the nuanced, risk-balanced perspective of a seasoned practitioner.

6. Is ChatGPT’s 10x price worth it?

Considering today’s prevailing legal fee of $500-2,000 per hour, $200 per month seems to be a bargain. However, when presented with the choice between $200/month vs. free, 3 out of the 4 evaluators chose free.
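To put the “bargain” in numbers, here is a back-of-the-envelope sketch in Python. It assumes, purely for illustration, that the subscription pays for itself once it saves an equivalent amount of billable lawyer time; the function name is hypothetical, and the only inputs are the $200/month price and the $500-2,000 hourly range quoted above.

```python
def break_even_minutes(subscription_per_month, hourly_rate):
    """Minutes of billable time per month whose value equals the subscription."""
    return subscription_per_month * 60 / hourly_rate


SUBSCRIPTION = 200  # ChatGPT Pro, USD per month (from the post)

for rate in (500, 2000):  # low and high end of the quoted hourly fee range
    minutes = break_even_minutes(SUBSCRIPTION, rate)
    print(f"At ${rate}/hour, break-even is {minutes:.0f} minutes per month")
```

Under that assumption the subscription breaks even at 24 minutes of saved time per month at $500/hour and 6 minutes at $2,000/hour, which makes the evaluators’ preference for the free option all the more striking.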

Conclusion

Just a week ago, I was ready to declare that no meaningful progress in AI’s capabilities was made on legal tasks during the last year. The arrival of Deep Research is a promising step forward, but I don’t see it as an immediate game-changer. The technology shows real improvements in synthesizing information and generating sophisticated research reports, contracts and policies, but challenges remain in accuracy, validation, and practical usability. AI will undoubtedly continue evolving, but for now, it remains a tool that requires legal expert oversight, not a replacement for sophisticated legal expertise.

Acknowledgement

I want to thank Nick Abrahams, Ben Hendrick and Behrang Seraj, whose generous contributions made this report possible.

Agree that Deep Research is very impressive for most legal research questions. Understandably, it struggles with anything very new or novel. But it also does, once in a while, get some stuff wrong, and because it's mostly right, it's actually very hard to determine when it makes a mistake or misses something. Not sure yet how best to quality check a 30-page memo from Deep Research without redoing all the work. Stay tuned on that.